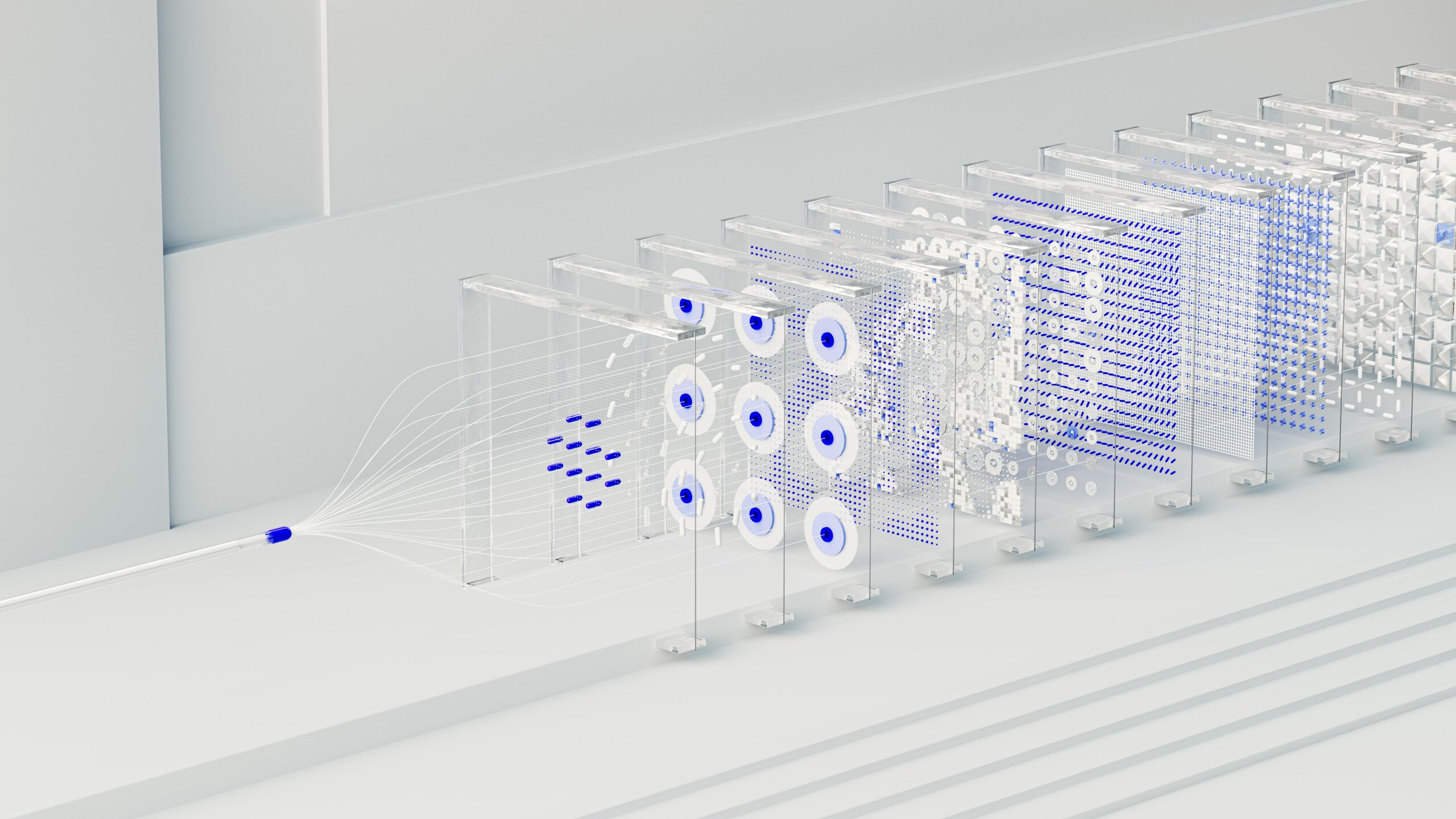

Supercomputing Cluster

Leverage the power of a supercomputing cluster to train and serve LLMs with tens or hundreds of billions of parameters. High memory bandwidth and capacity are critical for efficient training and inference at this scale, and they directly shorten time to results.
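To give a feel for why memory capacity matters at this scale, here is a rough back-of-the-envelope sketch. It assumes 16-bit weights (2 bytes per parameter) and counts only the weights themselves; optimizer state, gradients, activations, and KV caches are not included, so real requirements are higher. The function name `weight_memory_gb` is illustrative only.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed to hold the model weights alone, in gigabytes (10^9 bytes).

    Assumption: 2 bytes per parameter (16-bit precision) unless stated otherwise.
    """
    return num_params * bytes_per_param / 1e9


# Model sizes in billions of parameters, chosen only as examples.
for billions in (7, 70, 175):
    gb = weight_memory_gb(billions * 1e9)
    print(f"{billions}B parameters at 16-bit precision: ~{gb:.0f} GB of weights")
```

Under these assumptions, a model in the hundreds of billions of parameters does not fit in a single accelerator's memory, which is why such models are spread across many GPUs in a cluster.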

Machine Learning

Harness the power of artificial intelligence to reveal hidden insights, automate complex tasks, and continually enhance performance. Our machine learning platform simplifies the development and large-scale deployment of intelligent models.

Inference

Gain deeper insights from your data through high-performance inference capabilities. Our service manages model deployment, scaling, and low-latency predictions, ensuring enterprise-grade reliability.

Generative Pre-trained AI

Engage customers naturally with content generated by pre-trained AI models.

High-Performance Computing

Accelerates HPC tasks in scientific research and industrial applications, including weather forecasting, drug discovery, and quantum computing.

Deep Learning and Large Language Models

Handles the large parameter counts of deep learning models, particularly in the training and inference of large language models, supporting tasks such as text analysis, language translation, and content creation.

Generative AI

Ideal for generative AI applications such as VR, entertainment, medical imaging, and drug design, leveraging deep learning to create new data including images, videos, audio, and text.

Superior Networking Architecture

Our HGX H200 distributed training clusters use a rail-optimized design built on NVIDIA Quantum-2 InfiniBand networking, which supports in-network collectives with NVIDIA SHARP and provides 3.2 Tbps of GPUDirect bandwidth per node.
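One plausible way to arrive at the per-node figure is sketched below. It assumes an 8-GPU node with one 400 Gb/s InfiniBand link per GPU, which is a common rail-optimized layout; the actual node configuration is not stated here, so treat both constants as assumptions.

```python
# Assumption: 8 GPUs per node, each paired with its own InfiniBand adapter (one "rail" per GPU).
LINKS_PER_NODE = 8
# Assumption: each link runs at 400 Gb/s.
LINK_GBPS = 400


def node_bandwidth_tbps(links: int = LINKS_PER_NODE, gbps_per_link: int = LINK_GBPS) -> float:
    """Aggregate network bandwidth per node in Tb/s (1 Tb/s = 1000 Gb/s)."""
    return links * gbps_per_link / 1000


print(f"{LINKS_PER_NODE} links x {LINK_GBPS} Gb/s = {node_bandwidth_tbps()} Tb/s per node")
```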

Pre-Configured Flavours

Enhance the efficiency of any GPU-accelerated task using preconfigured options or tailor custom configurations to meet your specific requirements.

Managed Kubernetes

Managed Kubernetes delivers high performance without the infrastructure overhead. Enjoy rapid instance provisioning and responsive auto-scaling across thousands of GPUs.
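As an illustration of what a GPU workload on Kubernetes can look like, the sketch below builds a minimal Pod manifest that requests GPUs. It assumes the cluster exposes GPUs through the NVIDIA device plugin under the `nvidia.com/gpu` resource name; the pod name, container name, and image path are hypothetical placeholders, not values from this service.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a minimal Kubernetes Pod manifest that requests `gpus` GPUs.

    Assumption: GPUs are advertised as the extended resource "nvidia.com/gpu".
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "worker",  # hypothetical container name
                    "image": image,
                    # GPUs are requested under resource limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical pod name and image, for illustration only.
manifest = gpu_pod_manifest("train-job", "registry.example.com/team/trainer:latest", 8)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and submitted with `kubectl apply -f`, since Kubernetes accepts manifests in JSON as well as YAML.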

Enterprise AI

Ideal for scaled infrastructure supporting the largest and most complex AI workloads, including transformer-based models such as large language models, vision AI, and more.

Quick Deployment

Deploy container-based GPU instances that launch within seconds, using images from both public and private repositories.

High-Performance Storage

Fault-tolerant cloud storage with triple replication. Easily adjust volumes to optimize IOPS and performance.